In the past I have referred to the OpenTelemetry Demo as a "mouth breather," meaning that when I ran it locally my laptop fans powered on at full force and sounded ready for liftoff. To be fair, that was a year-ish and many PRs ago.

My interest in the OTel Demo was piqued by its potential as a playground for developers to learn how to explore and leverage telemetry to better understand parts of an unfamiliar system. In my past life as an SRE I was often troubleshooting issues in services written in languages I didn't even know, and since infra is always guilty until proven innocent, I learned to let my curiosity drive me to gather the data and facts showing that no, actually, it was an application owner's issue.

Enter the OTel Demo, which goes beyond a simplistic single sample application and includes 9 of the 10 supported languages and plenty of interesting interactions.

Below is my experience report on getting it up and running on my 2022 MacBook Pro with an Apple M2 chip and 16GB of memory, running macOS Sonoma 14.4.1 if it matters. This is not intended as a tutorial to follow verbatim; it's a way to share my field notes and the lessons learned along the way.

Choices I Made

- Podman as the container engine. This is what my team has standardized on, and in a world where Docker is the assumed default it does make my life a bit more difficult, since I am unwilling to juggle both Docker and Podman locally.

- Running the Demo in Kubernetes, not via docker-compose. Partially because I wanted K8s telemetry, but mostly because I prefer using technology the way it was designed and intended, and podman-compose is not officially supported by or connected to Podman proper, so I ruled it out on principle.

Approach #1 Minikube!

In the past I've used minikube to run and test deployments locally without much fuss, and I naively expected setting up the cluster to be the most straightforward part of this whole thing.

Minikube supports Podman as a driver (docs), selected by passing a flag on cluster creation. Yay!

minikube start --driver=podman --container-runtime=cri-o

Minikube + Helm Install

With a newly minted cluster running, I was ready to deploy the whole setup, and the docs recommend going with the Helm install. I am a rule follower, so this is how I started:

helm repo add open-telemetry https://opentelemetry.github.io/opentelemetry-helm-charts

helm install my-otel-demo open-telemetry/opentelemetry-demo

If everything had gone according to plan, I would have had a slate of processes up and running and things would have been hunky dory.

Instead, I was greeted with a terminal full of ErrImagePull, ImagePullBackOff, and ImageInspectError.

"UGH I KNEW HELM WAS GOING TO BE A PROBLEM" was my first thought. I was already anxious just helm installing a bunch of charts... what if something went wrong? All I had in the open-telemetry/opentelemetry-demo repo was a giant K8s YAML file, no charts (as of this writing, the charts live over in open-telemetry-helm-charts/charts/opentelemetry-demo, which I only found later). Untangling what is wrong and where, plus parsing Helm templates, is a pain I wanted to avoid if at all possible.

After a deep, cleansing breath I took a closer look at my terminal and noticed that off-the-shelf open source components like Jaeger, Grafana, Kafka, Prometheus, and Redis had been pulled down, no problemo. All the errors were scoped to the custom services... huh.
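One way to confirm that failures cluster by where the images come from is to group the stuck containers by registry. Docker's convention is that the first path component of an image reference is a registry host only if it contains a dot or a colon or is localhost; anything else resolves to Docker Hub. Below is a small Python sketch that applies that rule to the JSON from kubectl get pods -n otel-demo -o json. It is a simplification of the full image-reference grammar, and the function names are my own.

```python
import json
from collections import defaultdict


def image_registry(ref: str) -> str:
    """Return the registry host of an image reference (simplified Docker rule)."""
    first, sep, _ = ref.partition("/")
    # Only a first component with '.' or ':' (or 'localhost') is a registry host.
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"  # no explicit registry means Docker Hub


def waiting_by_registry(pods_json: str) -> dict[str, list[tuple[str, str]]]:
    """Group (pod name, waiting reason) pairs by the registry of the container image.

    Expects the JSON printed by `kubectl get pods -o json`.
    """
    grouped: dict[str, list[tuple[str, str]]] = defaultdict(list)
    for pod in json.loads(pods_json).get("items", []):
        name = pod["metadata"]["name"]
        for status in pod.get("status", {}).get("containerStatuses", []):
            waiting = status.get("state", {}).get("waiting")
            if waiting:  # running or terminated containers have no "waiting" state
                reason = waiting.get("reason", "Unknown")
                grouped[image_registry(status.get("image", ""))].append((name, reason))
    return dict(grouped)
```

Pods that were never scheduled have no containerStatuses yet, so they will not show up here; those need a describe instead.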

I described one of the pods and saw this event message:

Failed to pull image "ghcr.io/open-telemetry/demo:1.6.0-paymentservice": writing blob: adding layer with blob "sha256:193dce5f4a0cf83f577b68c70b4e91199f39f88aac032b21359a13314f7dc6f5": Error processing tar file(exit status 1): operation not permitted

"What even is ghcr.io??" A quick Ecosia search enlightened me: ghcr = GitHub Container Registry. OK, so this new-to-me registry, coupled with image pull issues, made me suspect an auth/login problem.

I skimmed the docs for Authenticating to the Container Registry, which told me I needed to create a Personal Access Token (classic) to publish, install, or delete packages. Easy enough. Scrolling down further, there was a Note that made me chuckle/cry... by default the token scope is set too broad (aka violating the Principle of Least Privilege), and the recommended workaround was to hack the URL 🤦‍♀️.

OK, with my PAT in hand (technically, safely ensconced in my password manager), I consulted the Minikube docs on Interacting with Registries, which covers registries from Google, AWS, Azure, and Docker out of the box. Nothing about this GitHub Container Registry...

Same thing with the guided wizard: no mention of configuring ghcr.io.

$ minikube addons configure registry-creds
Do you want to enable AWS Elastic Container Registry? [y/n]: n
Do you want to enable Google Container Registry? [y/n]: y
-- Enter path to credentials (e.g. /home/user/.config/gcloud/application_default_credentials.json):/home/user/.config/gcloud/application_default_credentials.json
Do you want to enable Docker Registry? [y/n]: n
Do you want to enable Azure Container Registry? [y/n]: n
registry-creds was successfully configured

Abandoning the docs, I found my next hint in this SO post: "It's because you did not create a docker registry secret." Hmmmm. OK, fine, I can do that.

Back to the docs with Pull an Image From a Private Registry, but I was immediately sidetracked by step one being docker login. I'm Podman'ing! (If you're a Podman user, get used to saying this to yourself A LOT.) I skipped to the part showing what the config.json should look like:

{
  "auths": {
    "https://index.docker.io/v1/": {
      "auth": "c3R...zE2"
    }
  }
}

example config JSON from the docs

I figured creating the same file with the ghcr address would do the trick, and obviously I replaced the auth value with my personal access token.

{
  "auths": {
    "https://ghcr.io": {
      "auth": "c3R...zE2"
    }
  }
}

example config JSON with ghcr address
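One likely reason a hand-written file like this misbehaves: in Docker's config.json format, the auth field holds the base64 encoding of username:password (for ghcr.io, your GitHub username and the token), not the bare token. Here is a minimal Python sketch that builds the file that way. The placeholder username, the PERSONAL_ACCESS_TOKEN environment variable, and keying the entry on the bare ghcr.io hostname are my assumptions for illustration.

```python
import base64
import json
import os


def docker_config_json(registry: str, username: str, token: str) -> str:
    """Build a .dockerconfigjson document with credentials for one registry."""
    # "auth" is base64("username:token"), not the raw token.
    auth = base64.b64encode(f"{username}:{token}".encode()).decode()
    return json.dumps({"auths": {registry: {"auth": auth}}}, indent=2)


if __name__ == "__main__":
    # Placeholder values; the token is read from the environment rather than hard-coded.
    token = os.environ.get("PERSONAL_ACCESS_TOKEN", "placeholder-token")
    print(docker_config_json("ghcr.io", "your-github-username", token))
```

Redirecting that output to a file gives something you can pass to kubectl create secret generic with --from-file=.dockerconfigjson=... exactly as before.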

From here I followed the steps under Create a Secret Based on Existing Credentials, which purported to create a Secret of type kubernetes.io/dockerconfigjson to authenticate with a registry. Alright, I was feeling good by this point!

kubectl create secret generic regcred \
  --from-file=.dockerconfigjson=ghcr-config \
  --type=kubernetes.io/dockerconfigjson

Next I needed to actually update the K8s YAML to use this Secret, like in the example below. At this point I said a not-so-fond farewell to Helm; I would rather take my chances editing the giant, all-encompassing K8s YAML in the otel-demo repo.

apiVersion: v1
kind: Pod
metadata:
  name: private-reg
spec:
  containers:
  - name: private-reg-container
    image: <your-private-image>
  imagePullSecrets:
  - name: regcred

Minikube + Kubectl Apply

I started to get overconfident right about here, thinking THIS has got to be the fix! I updated the Deployment section for the ad service to include the new regcred Secret and, even though it felt so wrong to run a raw kubectl apply, I did it:

kubectl apply --namespace otel-demo -f kubernetes/opentelemetry-demo.yaml

...aaaand got the same image issues. Was it the JSON config that was weird? I should have figured crafting it by hand would be error-prone. Scrolling down in the K8s Secret docs, I found an alternative approach via the CLI, which meant I could get rid of the file with my access token in plaintext. Woo!

So I ran a quick delete on the existing K8s resources to clear everything out and created my Secret:

kubectl create secret docker-registry regcred \
  --docker-server=https://ghcr.io \
  --docker-username=paigerduty \
  --docker-password=$PERSONAL_ACCESS_TOKEN \
  --docker-email=paigerduty@chronosphere.io
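To double-check what a command like that actually stored, you can decode the Secret: Kubernetes base64-encodes Secret data, and the auth value inside the config is base64-encoded again. A Python sketch (the function name is my own) that reads the JSON from kubectl get secret regcred -o json and reports only each registry and its username, never the password:

```python
import base64
import json


def registry_logins(secret_json: str) -> dict[str, str]:
    """Map each registry in a dockerconfigjson Secret to the username it holds.

    Expects the JSON printed by `kubectl get secret <name> -o json`.
    """
    secret = json.loads(secret_json)
    # First layer: Kubernetes base64-encodes every value under "data".
    config = json.loads(base64.b64decode(secret["data"][".dockerconfigjson"]))
    logins = {}
    for registry, entry in config.get("auths", {}).items():
        if "auth" in entry:
            # Second layer: "auth" is base64("username:password"); keep only the username.
            logins[registry] = base64.b64decode(entry["auth"]).decode().split(":", 1)[0]
        else:
            logins[registry] = entry.get("username", "")
    return logins
```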

This did not entirely work. On the one hand, it did show me that GitHub Container Registry is in fact a docker registry, just not the Docker registry, aka Docker Hub (DUH in retrospect, but my brain associates "docker" with Docker the company/Docker Hub). Anyway, this did get me to properly set up ghcr creds with Podman, so bully for me!

Oddly enough, the custom service images were now pulled, but the open source images were reporting ImageInspectError. What?!? There's a chance I borked the K8s YAML by editing it by hand, and my IDE was already flagging 803 problems with the YAML as-is, so I really didn't have the time/energy/desire to fix them all and figure out if/where I had messed up the indentation.
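Hand-editing dozens of workloads in one giant file is exactly where indentation slips creep in. A less error-prone route is to transform the parsed objects in code. This Python sketch assumes the manifest has already been loaded into a list of dicts (for example with a YAML library, not shown) and adds an imagePullSecrets entry to every pod template it finds; the set of kinds it touches is my assumption. Kubernetes can also attach imagePullSecrets to a ServiceAccount, so that every pod using that account inherits them without editing each Deployment.

```python
import copy

# Workload kinds whose pod template lives at spec.template.spec (assumed list).
POD_TEMPLATE_KINDS = {"Deployment", "StatefulSet", "DaemonSet", "ReplicaSet", "Job"}


def with_pull_secret(manifests: list, secret_name: str) -> list:
    """Return copies of the manifests with imagePullSecrets added to every pod template."""
    patched = []
    for original in manifests:
        obj = copy.deepcopy(original)  # leave the caller's objects untouched
        if isinstance(obj, dict) and obj.get("kind") in POD_TEMPLATE_KINDS:
            pod_spec = obj.setdefault("spec", {}).setdefault("template", {}).setdefault("spec", {})
            secrets = pod_spec.setdefault("imagePullSecrets", [])
            if {"name": secret_name} not in secrets:  # idempotent: never add it twice
                secrets.append({"name": secret_name})
        patched.append(obj)
    return patched
```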

At this point I was pretty frustrated and stepped away from the computer to snuggle my cat Norman, watch Abbott Elementary, and sip on a lassi.

Approach #2 kind

It was a new day: the sun was shining, the birds were chirping, and it was time to get back to it.

Despite getting Minikube to a place where it could authenticate and pull images from the registry, I was mystified by the new ImageInspectError and thought, "What if, for funsies, I tried this with a kind cluster?" Besides, I didn't have a great reason or affinity for Minikube in the first place, other than some passing familiarity.

Despite the name standing for Kubernetes IN Docker, support for Podman was called out on page 1. Woo! There was even a whole page on setting up kind with rootless Podman (be still my heart!).

KIND_EXPERIMENTAL_PROVIDER=podman kind create cluster

kubectl cluster-info --context kind-kind

And suddenly a beautiful cluster appeared! Time to deploy the Demo:

kubectl create ns otel-demo

kubectl apply --namespace otel-demo -f kubernetes/opentelemetry-demo.yaml

I watched the k9s overview for the otel-demo namespace and smiled as every pod, off-the-shelf OSS or custom, started successfully pulling down its images. YES IT'S HAPPENING!

....

And then some pods got stuck in perpetual Pending. Running a quick describe, I saw the reason was "waiting for kafka"... so I was off to describe the kafka pod, and discovered that it couldn't be scheduled because no node had enough available memory.

Now that is a problem with a quick fix! I needed to bump up the resources of the Podman VM. I wasn't sure by how much, but I figured it wouldn't take more than a couple of tries, and each try was trivial to do.
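The scheduler places a pod only if the sum of its containers' memory requests fits in what the node has left, and when a container sets a memory limit but no request, Kubernetes uses the limit as the request (unless something like a LimitRange supplies a default). Here is a simplified Python sketch of that memory check. It ignores CPU, init containers, and every other scheduling rule, it only understands the common quantity suffixes, and any quantities you feed it are up to you rather than the Demo's real values.

```python
import re

# Decimal (k, M, G, T) and binary (Ki, Mi, Gi, Ti) suffixes for memory quantities.
_MULTIPLIERS = {
    "": 1,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
}


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '512Mi' or '1G' to bytes (common suffixes only)."""
    match = re.fullmatch(r"(\d+(?:\.\d+)?)(Ki|Mi|Gi|Ti|k|M|G|T)?", quantity.strip())
    if match is None:
        raise ValueError(f"unsupported memory quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(float(number) * _MULTIPLIERS[suffix or ""])


def memory_request(container: dict) -> int:
    """Memory request in bytes, falling back to the limit when no request is set."""
    resources = container.get("resources", {})
    value = resources.get("requests", {}).get("memory") or resources.get("limits", {}).get("memory")
    return parse_memory(value) if value else 0


def fits_on_node(pod_spec: dict, allocatable: str, already_requested: int) -> bool:
    """True if the pod's summed memory requests fit in what the node has left."""
    needed = sum(memory_request(c) for c in pod_spec.get("containers", []))
    return already_requested + needed <= parse_memory(allocatable)
```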

First Try

podman machine stop

podman machine set --cpus 2 --memory 2048

podman machine start

Second Try

podman machine stop

podman machine set --cpus 6 --disk-size 150 --memory 6000

podman machine start

The first try ended up with similar results, and I probably went a bit overboard with the second try, but I really, really, REALLY just wanted to get this up and running.

If you've read this far, you also deserve to bask in the glow of a terminal full of Running pods. While I had to wait an excruciating 4m30s in real time, here's a sped-up version:
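Rather than guessing, the VM size can be estimated from the total memory the Demo's pods request. The podman machine --memory option takes a value in MiB, so the 6000 above is roughly 5.9 GiB. Below is a Python sketch of that arithmetic; the 25% headroom for the kind node's own overhead (kubelet, system pods) and the 1 GiB rounding step are arbitrary assumptions, and the total you pass in would have to be added up from your own cluster.

```python
import math


def machine_memory_mib(requested_bytes: int, headroom: float = 0.25, step_mib: int = 1024) -> int:
    """Suggest a `podman machine set --memory` value in MiB.

    Adds a fractional headroom (an assumed allowance for node overhead) to the
    total requested memory and rounds up to a multiple of step_mib.
    """
    needed_mib = requested_bytes / 2**20 * (1 + headroom)
    return math.ceil(needed_mib / step_mib) * step_mib
```

For example, if the pods request 4 GiB in total, machine_memory_mib(4 * 2**30) suggests 5120.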

Live screengrab of k9s terminal updating otel-demo pod status in realtime until everything is in Running state

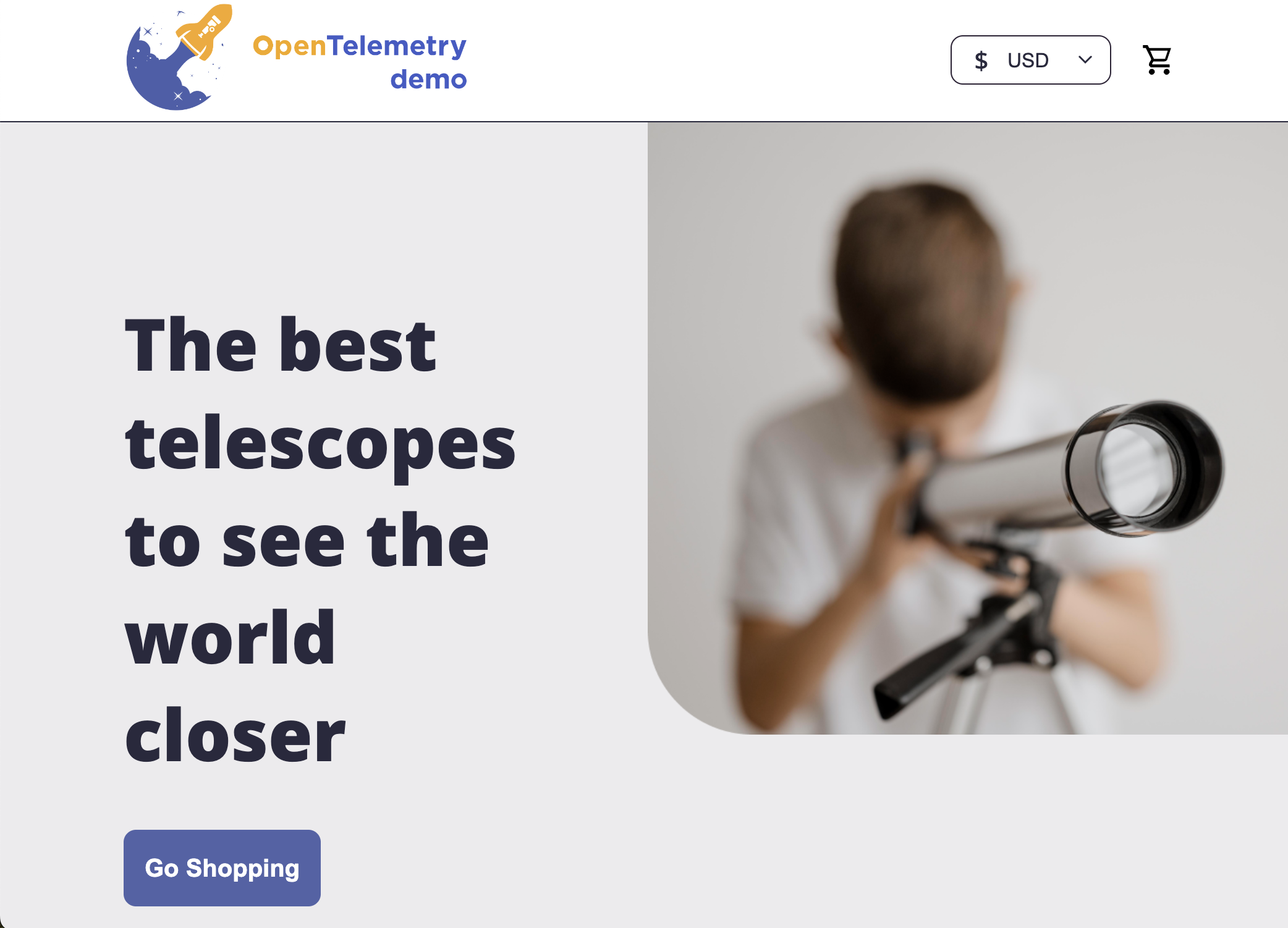

At this point, all that was left was port-forwarding to the frontendproxy, firing up a browser, and hitting http://localhost:8080/, where I was greeted by the Demo app home page: naturally, a telescope storefront 🔭
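The port-forward and the frontend proxy can take a moment to become ready, so a browser hit right away may fail. A small Python sketch that polls a URL until it answers without an HTTP error; the function name, the 120-second timeout, and the 2-second polling interval are arbitrary choices of mine.

```python
import http.client
import time
import urllib.request


def wait_for_http(url: str, timeout_s: float = 120.0, interval_s: float = 2.0) -> bool:
    """Poll url until it returns a non-error HTTP response or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            # urlopen follows redirects and raises HTTPError (an OSError) on 4xx/5xx.
            with urllib.request.urlopen(url, timeout=5) as response:
                if response.status < 400:
                    return True
        except (OSError, http.client.HTTPException):
            pass  # port-forward not up yet, or the proxy is still starting
        time.sleep(interval_s)
    return False
```

With the port-forward running, wait_for_http("http://localhost:8080/") returns True once the storefront responds, and False if it never does within the timeout.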

Huzzah! And now the only thing left was to remember why I was trying to spin this up in the first place 😆

Takeaways

- Docker is assumed as the default for everything; however, I am a fan of having choice in technology and am glad Podman exists and is gaining ground

- K9s really helped me get a quick bird's-eye view of what was going on with the cluster and pods

- My first instinct was to check the logs (idk why), but obvi, for services that couldn't spin up or were stuck, the K8s events from a describe always held the answer

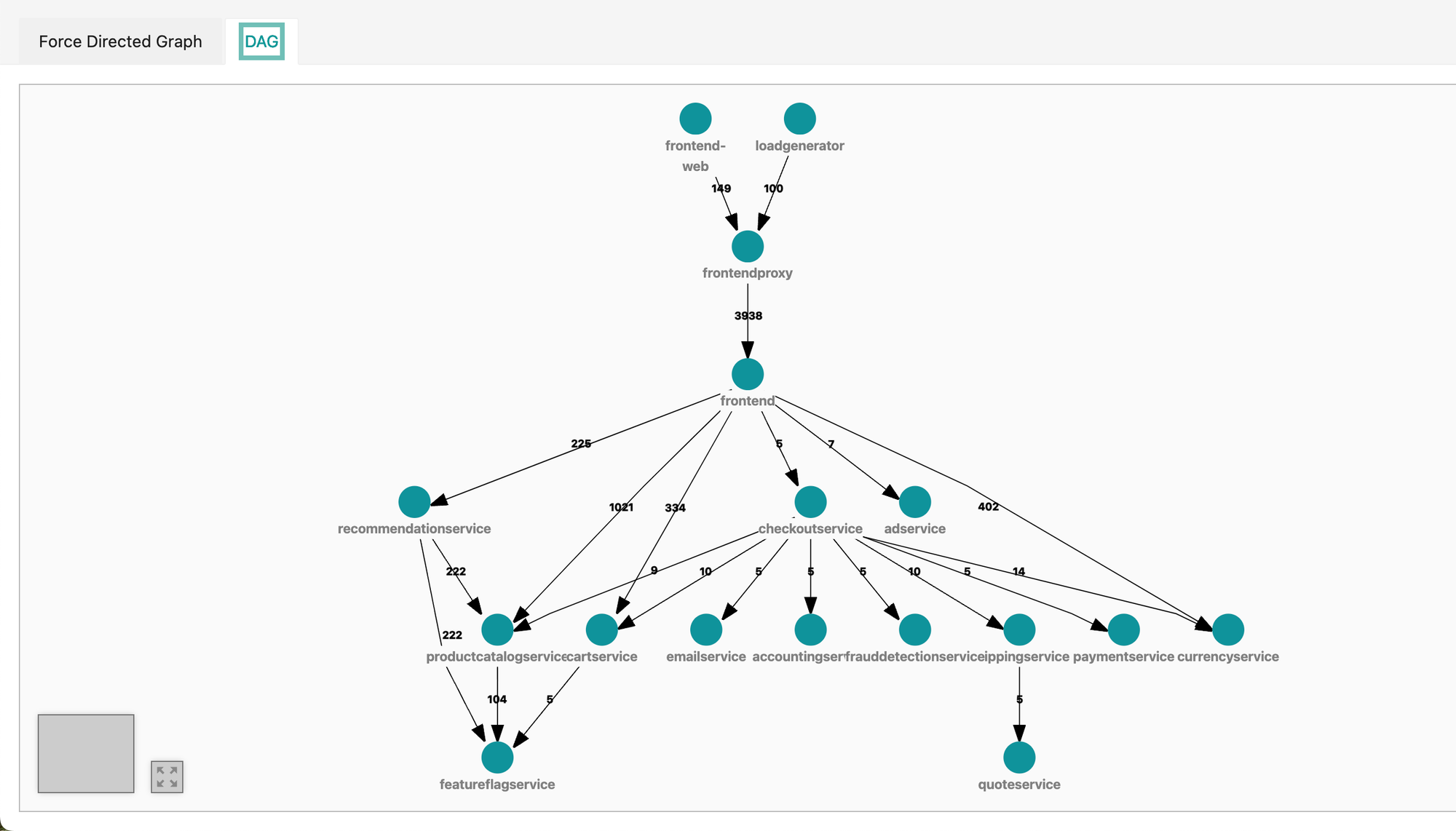

- The DAG view in Jaeger was a great way to quickly suss out the architecture
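The lesson about describe and events also scales to the whole namespace: kubectl get events -n otel-demo -o json returns every event at once, and the Warning ones (reasons like FailedScheduling, Failed, or BackOff) point straight at the stuck pods. A Python sketch, with a function name of my own choosing, that tallies Warning events by object and reason:

```python
import json
from collections import Counter


def warning_summary(events_json: str) -> Counter:
    """Count Warning events by ("Kind/name", reason).

    Expects the JSON printed by `kubectl get events -o json`.
    """
    counts: Counter = Counter()
    for event in json.loads(events_json).get("items", []):
        if event.get("type") != "Warning":
            continue  # skip Normal events such as Pulled or Started
        obj = event.get("involvedObject", {})
        key = (f"{obj.get('kind')}/{obj.get('name')}", event.get("reason", ""))
        # "count" can be missing or null on some events; treat that as one occurrence.
        counts[key] += event.get("count") or 1
    return counts
```

Calling most_common() on the result lists the noisiest problems first.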

CAT TAX

Norman, my beautiful cat, who doesn't know what a kubernete even is
